About the Workshop

Welcome to the 1st International Workshop on Next-Gen Middleware for MLOps in Distributed Systems (MIND). MIND will be hosted in conjunction with the 26th ACM/IFIP International Middleware Conference, which will be held at Vanderbilt University, Nashville, TN, USA, from 15th to 19th December 2025.

This workshop aims to bring together researchers, practitioners, and industry stakeholders to explore middleware innovations that support MLOps in distributed systems. It will focus on practical solutions to real-world challenges in orchestrating end-to-end ML pipelines, from data collection to model deployment and continuous monitoring, in dynamic, heterogeneous, and resource-constrained environments.

Call for Papers

Machine Learning Operations (MLOps) is a set of practices that combines machine learning, DevOps, and data engineering to streamline the end-to-end lifecycle of ML systems. It covers the full spectrum of activities involved in operationalizing ML, such as data collection, preprocessing, model training, validation, deployment, monitoring, and continuous improvement, with the goal of ensuring scalability, reliability, and efficiency in production environments.

However, challenges such as large-scale data transfer to the cloud, limited bandwidth, latency, and privacy concerns have increased the need for effective MLOps in distributed systems. In these settings, ML workflows must be managed and automated across a combination of cloud, fog, and edge environments. Implementing MLOps in such hybrid infrastructures requires addressing the inherent complexity of distributed pipelines while maintaining system performance, model reliability, and data security.

To meet these demands, middleware has emerged as a critical enabler. Middleware provides a layer of abstraction and coordination that simplifies the deployment, monitoring, and management of ML models across distributed components. It handles resource discovery, workload scheduling, communication management, and fault tolerance, while also integrating with tools for version control, experiment tracking, and model retraining.

Topics of Interest

We invite submissions on a wide range of topics, including but not limited to:

- Middleware-supported communication protocols for scalable and efficient MLOps across distributed systems

- Middleware for dynamic resource management and orchestration in heterogeneous ML environments

- Middleware-driven model compression and optimization for efficient deployment in resource-constrained settings

- Middleware solutions for deploying, scaling, and monitoring ML workloads across hybrid infrastructures

- Lightweight and energy-efficient middleware frameworks for sustainable MLOps

- Trustworthy and privacy-preserving middleware systems for secure ML operations

- APIs for middleware-based development of distributed MLOps applications

- Middleware-supported migration and fault-tolerance mechanisms for robust ML service availability

- Middleware support for federated learning and decentralized model training workflows

Important Dates

- Paper Submission Deadline: September 24, 2025 (Extended Deadline: October 12, 2025)

- Notification of Acceptance: October 30, 2025

- Workshop Papers Camera-Ready: November 09, 2025

*All deadlines are Anywhere on Earth (AoE).

Submission Guidelines

Authors are invited to submit original, unpublished work that is not concurrently submitted for publication elsewhere, in one of the following formats:

- Full paper (6 pages): Interesting, novel results or completed work on topics within the scope of the workshop.

- Short paper (4 pages): Exciting preliminary work or novel ideas in their early stages.

- Poster (2 pages): Working, presentable systems or brief explanations of a research project.

The page limit includes figures, tables, appendices, and references. The font size must be set to 9pt. Papers exceeding the page limit or using smaller fonts will be desk-rejected without review. Submitted papers must adhere to the formatting instructions of the ACM SIGCONF style, which can be found on the ACM template page.

For each accepted paper, at least one author is required to register for and attend the workshop in person to present their poster/paper on-site. The Middleware 2025 conference proceedings will be published in the ACM Digital Library.

Submission Portal: Submit papers via HotCRP.

Organizing Committee

General Chairs

Program Committee Members

- Rajiv Ranjan: Newcastle University, UK

- Omer Rana: Cardiff University, UK

- Aditya Indoori: Amazon, USA

- Ajay Nagrale: Meta, USA

- Sajjan AVS: Amazon, USA

- Martin Higgins: Canadian Nuclear Labs, Canada

- Ali Raza: Honda Research Institute Europe, Germany

- Neetesh Kumar: IIT Roorkee, India

- Francesco Piccialli: University of Naples Federico II, Italy

Workshop Program

The MIND workshop will take place on December 15, 2025, in hybrid mode. The detailed schedule of the workshop is given below. Please note that all times are in local time in Nashville, TN, USA.

Keynote Session

Keynote Title: Safeguarding Artificial Intelligence.

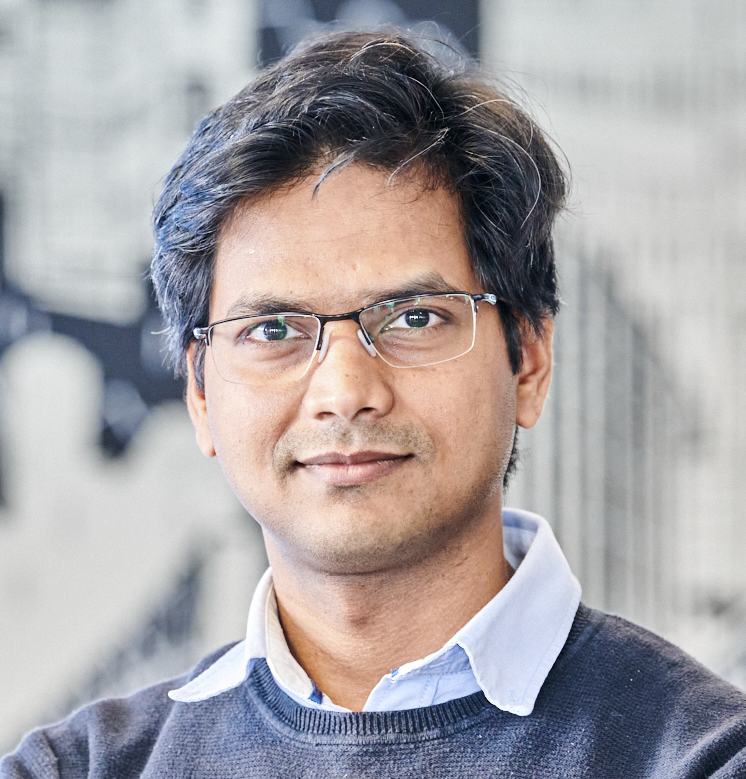

Dr. Varun Ojha, Associate Professor in Artificial Intelligence at the School of Computing, Newcastle University, UK.

Prof. Varun Ojha is a Senior Lecturer (Associate Professor) in Artificial Intelligence at the School of Computing, Newcastle University. He is an Artificial Intelligence Theme Leader and a Co-Investigator on the EPSRC-funded National Edge AI Hub. He works in Trustworthy Artificial Intelligence: Deep Learning, Neural Networks, Machine Learning, and Data Science. In the past, Dr Ojha served as a Lecturer (Assistant Professor) in Computer Science at the University of Reading, UK, and as a Postdoctoral Fellow at the Swiss Federal Institute of Technology (ETH Zurich), Zurich, Switzerland. Before this, Dr Ojha was a Marie-Curie Fellow (funded by the European Commission) at the Technical University of Ostrava, Czech Republic. For more information, please visit his personal website at: ojhavk.github.io.

Abstract: Artificial Intelligence (AI) algorithms have become an inevitable part of our lives and are so pervasive that users often engage with them unconsciously. Both users and systems contribute vast amounts of data to train these algorithms. AI addresses a wide range of critical and sensitive problems, from medical diagnostics and climate change mitigation to assisted driving and financial technologies. However, AI systems have both advantages and drawbacks. One significant concern is their security, as they are vulnerable to sophisticated malicious attacks, unintentional changes, omissions of context in training data, sensor aging, changes in the training environment, and other unforeseen circumstances. This makes AI applications at the edge, such as those running on smartphones and cars, susceptible to defects in training data and AI models. Safeguarding AI is concerned with protecting data integrity and the quality of learning of AI algorithms when they are exposed to cyber-attacks in edge computing environments and federated learning. Accordingly, this talk focuses on the security of AI systems at the edge.

Paper 1 (on-site): Automated Dynamic AI Inference Scaling on HPC-Infrastructure: Integrating Kubernetes, Slurm and vLLM

Authors: Tim Trappen (Ruhr University Bochum, Germany), Robert Keßler (University of Cologne, Germany), Roland Pabel (University of Cologne, Germany), Viktor Achter (University of Cologne, Germany), Stefan Wesner (University of Cologne, Germany)

Abstract: Due to rising demands for Artificial Intelligence (AI) inference, especially in higher education, novel solutions utilising existing infrastructure are emerging. The utilisation of High-Performance Computing (HPC) has become a prevalent approach for the implementation of such solutions. However, the classical operating model of HPC does not adapt well to the requirements of synchronous, user-facing, dynamic AI application workloads. In this paper, we propose a solution that serves LLMs by integrating vLLM, Slurm, and Kubernetes on the supercomputer RAMSES. The initial benchmark indicates that the proposed architecture scales efficiently for 100, 500, and 1000 concurrent requests, incurring an overhead of only approximately 500 ms in end-to-end latency.

Coffee Break & Networking

3:00 pm - 3:30 pm

Paper 2 (Virtual): Fine-grained Fault Tolerance in Distributed Training Toolkits using the Syndicated Actor Model

Authors: Rutger de Groen and Tony Garnock-Jones, Maastricht University, Maastricht, Netherlands

Abstract: Deep learning (DL) toolkits like TensorFlow and PyTorch provide distributed training strategies, but they have limited support for fault tolerance. Both provide the user with built-in checkpoint functionality to resume training after a restart; however, when a partial failure is detected, all ongoing training jobs are aborted and restarted, repeating work and wasting resources. In response, we explore the use of the Syndicated Actor Model (SAM), a recent model of distributed computation, to coordinate activities in a distributed training environment. The SAM encourages expressing distributed systems in terms of the joint state of a shared activity instead of in terms of point-to-point message exchange. This change in perspective has the potential to improve task distribution, resilience to failure, and efficient use of resources in distributed training scenarios.

Paper 3 (Virtual): Diverse Minimum Spanning Trees

Abstract: Spanning trees are fundamental structures in graph analysis and network design, yet conventional algorithms prioritize only edge weights or connectivity, often neglecting other structural attributes. In many real-world networks, edges carry additional labels (such as transport modes, communication channels, or data sources) that affect the interpretation and fairness of the network. We introduce DMST, a diversity-aware variant of Kruskal’s algorithm that jointly optimizes cost and diversity through a tunable parameter. To the best of our knowledge, this is the first MST algorithm to consider edge-diversity optimization. We evaluate the approach on synthetic graphs as well as the London Transport Network dataset. Results demonstrate a clear and controllable trade-off: improving the edge-class balance within the spanning trees incurs an increase in total edge weight. Our findings establish a principled framework for diversity-aware spanning tree construction, bridging classical graph optimization with fairness and representation objectives. This formulation opens new avenues for equitable network design, multi-modal transport planning, and diversity-regularized graph learning.

Contact Us

For any inquiries regarding the workshop, please contact the MIND chairs at: